Setting Up The Virtual Machines to host vCloud Components:

Now that we have all the parts of the ESX host in place, we can get into some of the actual vCloud Director requirements. First and foremost is the need to set up all the Windows machines that host the basic vSphere requirements. We can do this by creating an initial Windows 2008 R2 x64 template. From there we need the following Virtual Machines configured. I elected to use completely separate Virtual Machines for all these roles:

- Domain Controller/DNS Server (VLAN 110)

- SQL 2008 (VLAN 110)

- vCenter Server (VLAN 110)

Once these machines are configured, we can move on to the virtual machines required for the VMware vCloud Director components. I don’t see the need to fully detail the installation and configuration of vCenter, AD, and SQL. The Virtual Machines required for vCloud Director are the following. All of them should have IP addresses on VLAN 110 to keep them isolated from your other networks. If you choose to create VLAN routes in the core switch, those routes will give you access to these machines; otherwise they will all be completely isolated. In my case I do have a VLAN route from the Production VLAN (100) to VLAN 110, used only for terminal services, SSH, and remote console access.

- VMware vShield Manager (Virtual Appliance downloaded from VMware)

- CentOS Virtual Machine for Oracle

- CentOS Virtual Machine for vCD Cell Service

- Windows 2008 R2 x64 Virtual Machine for Chargeback 1.5

- Two Virtual Machines to run ESXi as Nested Virtual Machines.
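The isolation described above comes down to a single, narrow route between the Production VLAN and VLAN 110. The post doesn't say how the restriction to terminal services, SSH, and remote console is enforced, so the sketch below simply assumes an access list on that core-switch route and models it as a stateless allow-list. The port numbers (3389 for Terminal Services/RDP, 22 for SSH, 902 for the vSphere Client remote console) are common defaults and are assumptions here, as are the names `Flow` and `is_permitted`.

```python
from dataclasses import dataclass

# Hypothetical model of the one inter-VLAN route: Production (VLAN 100) may
# reach the lab (VLAN 110) only for management traffic.
# Port numbers are assumed common defaults:
# 3389 = Terminal Services/RDP, 22 = SSH, 902 = vSphere Client remote console.
ALLOWED_PORTS = {3389: "Terminal Services", 22: "SSH", 902: "Remote console"}
PRODUCTION_VLAN = 100
LAB_VLAN = 110


@dataclass(frozen=True)
class Flow:
    src_vlan: int
    dst_vlan: int
    dst_port: int


def is_permitted(flow: Flow) -> bool:
    """Return True if this simplified, stateless policy lets the flow through."""
    if flow.src_vlan == flow.dst_vlan:
        # Traffic within one VLAN never crosses the core-switch route.
        return True
    if (flow.src_vlan, flow.dst_vlan) == (PRODUCTION_VLAN, LAB_VLAN):
        return flow.dst_port in ALLOWED_PORTS
    # No other routes are defined, so every other inter-VLAN flow is isolated.
    return False


if __name__ == "__main__":
    for flow in (Flow(100, 110, 22), Flow(100, 110, 1433), Flow(110, 110, 1433)):
        print(flow, is_permitted(flow))
```

A real switch ACL would also need to allow the return traffic for these sessions; the sketch ignores that to keep the policy itself easy to read.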

I will not go over the installation of vCD or its components because that is covered in the VMware vCloud Director documentation on the VMware website. I will go into a few key configurations of the virtual ESXi servers, as there are a few quirks to be aware of.

Setting Up The Virtual ESXi Hosts:

ESXi 4.1 does not offer ESX as a guest operating system type by default, so to make this work we need to edit the .vmx file to support running ESXi inside a virtual machine. Below are the steps to create correctly configured virtual machines to run ESXi. These steps are generally outlined on other sites as well, but this is the minimum needed not only to install ESXi, but to actually allow a NESTED virtual machine to run on it. These two ESX hosts will become the vCD “Compute” cluster that you will point vCD to for resources.

Step #1:

- Initially use “Linux Other 64-Bit” as the Guest OS type

- 2 vCPUs and 3GB of RAM each

- 20GB Virtual Disk (Thin Disk)

- 6 network adapters, all on the VLAN 4095 (Virtual ESX Trunk) port group configured earlier

- Install ESXi into the Guest VM

- Configure the management network on VLAN 110 (the same VLAN as all the other lab virtual machines)

- Configure the root password

- Confirm connectivity

- If Promiscuous mode was not enabled on the vSwitch of the PHYSICAL host, this may not work properly

- Edit the VMX file to support booting Virtual Machines on these Virtual ESX hosts

- Shut down the VM and remove it from inventory

- Connect to the Physical ESX host to edit the .vmx file of each Virtual ESX host

- Edit the following two lines to read as shown below (note, however, that you CANNOT power up 64-bit guests on these virtual hosts, only 32-bit guests):

- guestOS = "vmkernel"

- guestOSAltName = "VMware ESX 4.1"

- Re-register the Virtual ESX VMs

- Start up the Virtual ESX hosts
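The .vmx edit in Step #1 is a plain text change, so it can be scripted if you are building more than one virtual ESX host. This is a minimal sketch, assuming you have copied each unregistered VM's .vmx file to a workstation (for example with the datastore browser or scp) and will copy it back before re-registering the VM. The helper names `set_vmx_options` and `patch_vmx_file` are hypothetical. Matching keys case-insensitively and dropping duplicate entries are defensive choices of this sketch, not documented VMware behavior.

```python
from pathlib import Path

# The two settings from the steps above. Each value must be wrapped in
# straight double quotes; curly quotes pasted from a web page will not work.
NESTED_ESX_SETTINGS = {
    "guestOS": "vmkernel",
    "guestOSAltName": "VMware ESX 4.1",
}


def set_vmx_options(vmx_text: str, settings: dict[str, str]) -> str:
    """Return a copy of vmx_text with each key in settings set to its value.

    The first existing line for a key is rewritten in place, later duplicates
    of that key are dropped, and missing keys are appended at the end.
    """
    wanted = {key.lower(): (key, value) for key, value in settings.items()}
    written = set()
    out = []
    for line in vmx_text.splitlines():
        key = line.split("=", 1)[0].strip().lower()
        if "=" in line and key in wanted:
            if key not in written:
                name, value = wanted[key]
                out.append(f'{name} = "{value}"')
                written.add(key)
            continue  # skip duplicate entries for a key we already set
        out.append(line)
    for key, (name, value) in wanted.items():
        if key not in written:
            out.append(f'{name} = "{value}"')
    return "\n".join(out) + "\n"


def patch_vmx_file(path: Path) -> None:
    """Save a .bak copy of a local .vmx file, then apply NESTED_ESX_SETTINGS."""
    text = path.read_text(encoding="utf-8")
    path.with_name(path.name + ".bak").write_text(text, encoding="utf-8")
    path.write_text(set_vmx_options(text, NESTED_ESX_SETTINGS), encoding="utf-8")


if __name__ == "__main__":
    # Illustrative sample contents, not taken from a real VM.
    sample = 'displayName = "vesx01"\nguestOS = "otherlinux-64"\n'
    print(set_vmx_options(sample, NESTED_ESX_SETTINGS), end="")
```

Run against the sample, the script keeps `displayName`, rewrites `guestOS` to `"vmkernel"`, and appends the missing `guestOSAltName` line.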

Step #2:

- Add the host names to the AD DNS server as A-Records

- Create a new Datacenter in vCenter for vCloud

- Create a new Cluster

- Add the two Virtual ESX hosts to the cluster
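Before adding the virtual ESX hosts to the cluster, it can help to confirm that the new A records resolve the way you expect from the vCenter Server. The sketch below is a simple forward-lookup check using Python's standard `socket.gethostbyname`. The host names and the 192.168.110.x addresses are hypothetical placeholders for whatever you created on the AD DNS server, and `check_a_records` is an illustrative name. The resolver is passed in as a parameter so the check can be exercised without a live DNS server.

```python
import socket
from typing import Callable

# Hypothetical names and addresses for the two virtual ESX hosts; replace
# them with the A records you actually created on the AD DNS server.
EXPECTED_A_RECORDS = {
    "vesx01.lab.local": "192.168.110.21",
    "vesx02.lab.local": "192.168.110.22",
}


def check_a_records(
    expected: dict[str, str],
    resolve: Callable[[str], str] = socket.gethostbyname,
) -> dict[str, str]:
    """Return a problem description for every host whose lookup is wrong.

    An empty result means every name resolved to the expected IPv4 address.
    """
    problems = {}
    for host, address in expected.items():
        try:
            actual = resolve(host)
        except OSError as exc:  # socket.gaierror is a subclass of OSError
            problems[host] = f"lookup failed: {exc}"
            continue
        if actual != address:
            problems[host] = f"resolves to {actual}, expected {address}"
    return problems
```

On the vCenter Server (or any machine pointed at the AD DNS server), `print(check_a_records(EXPECTED_A_RECORDS))` should print an empty dictionary once both records are in place.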

Step #3:

- Make sure VMNIC0 and VMNIC1 are on vSwitch0

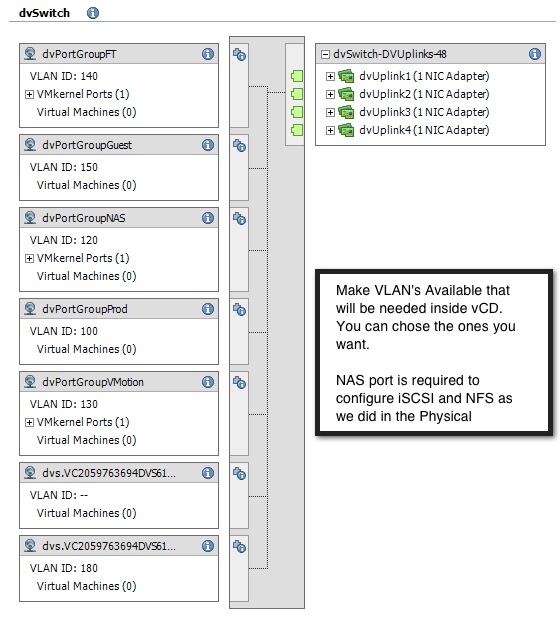

- The other four will be used on a Distributed Virtual Switch.

- All the NICs should be on the “Virtual ESX Trunk” port group

- Only configure “Management” on vSwitch0
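The uplink layout in Step #3 is easy to get slightly wrong when repeating it on the second host, so here is a small sketch that writes the plan down as data and checks it. The switch name "dvSwitch-vCloud" and the helper `validate_plan` are hypothetical; only the split itself (vmnic0 and vmnic1 on vSwitch0 carrying only Management, the other four on a Distributed Virtual Switch) comes from the steps above. The sketch checks a plan you type in; it does not query vCenter.

```python
# Hypothetical plan for one virtual ESX host, following Step #3.
# "dvSwitch-vCloud" is an assumed name, not one from the original setup.
PLAN = {
    "vSwitch0": {"uplinks": ["vmnic0", "vmnic1"], "portgroups": ["Management"]},
    "dvSwitch-vCloud": {
        "uplinks": ["vmnic2", "vmnic3", "vmnic4", "vmnic5"],
        "portgroups": [],
    },
}


def validate_plan(plan: dict) -> list[str]:
    """Return a list of problems; an empty list means the layout matches Step #3."""
    errors = []
    all_uplinks = [nic for switch in plan.values() for nic in switch["uplinks"]]
    if len(all_uplinks) != len(set(all_uplinks)):
        errors.append("an uplink is assigned to more than one switch")
    if sorted(all_uplinks) != [f"vmnic{i}" for i in range(6)]:
        errors.append("expected exactly vmnic0 through vmnic5 (six adapters)")
    vswitch0 = plan.get("vSwitch0", {})
    if sorted(vswitch0.get("uplinks", [])) != ["vmnic0", "vmnic1"]:
        errors.append("vSwitch0 should use vmnic0 and vmnic1 only")
    if vswitch0.get("portgroups") != ["Management"]:
        errors.append("vSwitch0 should carry only the Management network")
    return errors


if __name__ == "__main__":
    print(validate_plan(PLAN) or "layout matches Step #3")
```

Keeping the plan as data makes it simple to copy for the second virtual ESX host and re-run the same check.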

Chris Colotti's Blog Thoughts and Theories About…


Very nice write-up. I’ll be doing something very similar soon and this will come in quite handy for sure.

Chris,

I’m going to buy some equipment to build my home lab. I’m looking at motherboards that have dual sockets; should I spend the extra bucks for the second processor? My workloads will be vCloud Director, CapacityIQ, SQL, Oracle, VMware View and a file server (plus some VDI machines for the wife and kid). I also want to use this setup to demo products ad hoc for customers… thoughts?

If I were to do it again I would have gone dual socket, only because the operations on all the nested ESXi servers for imports and VM deployments have started to spike the CPU. When those operations are done it drops back down, but it consistently runs at 25% on the single socket with all the VMs idle.

Can you post on how you sized your VMs? (oracle, vESX, etc)

Yes, I can do that in a couple of weeks. I owe an update to this since I added N-1 in my lab to maintain vCD 1.0.1 and 1.5, plus I added vCO, etc. Getting an updated post out has been on my to-do list 🙂

Chris